Gabe Newell believes brain interfaces will create games 'superior' to reality 'fairly quickly'

Gabe Newell says the future of gaming may lie in brain-computer interface technology, or BCI for short. In an interview with 1 NEWS, a television network in Newell's home away from home, New Zealand, the co-founder of Valve talks about his vision of what gaming might look like once powered by BCI tech. It sure sounds immersive at a level we mere mortals could hardly imagine, or existentially scary. Take your pick.

Valve is currently working on an open-source BCI software project, he tells 1 NEWS, which would allow game developers to begin interpreting the signals within a player's head while they play a game.

"We're working on an open source project so that everybody can have high-resolution read technologies built into headsets, in a bunch of different modalities," Newell says.

That sounds harmless enough, in a way. A handful of companies have been attempting to use player response for quite some time now, all with a view to adjusting the gaming experience to how the player reacts. Bored? Here's a more exciting encounter. Getting tired? Here's a jump scare.

Monitoring motor function and signals can also serve as an input method, one that could help reduce or remove the delay between a player's command and the game's response. One such device was the Neural Impulse Actuator from OCZ, which is over a decade old and has long been listed as end-of-life.

But it goes deeper than that, Newell says, and he's clearly an avid fan of BCI technology. Back in March 2020 he told IGN that he thought "connecting to people's motor cortex and visual cortex is going to be way easier than people expected," and that it was merely a process of learning what things work and what things don't, what turns out to be worthwhile and what turns out to be "party tricks".

My guess is we're still not much closer to answering any of these questions as of today.

Yet Newell does expand on what may come to pass, and it lies in the editing of these signals. He says that gaming could become far more immersive than anything possible with our 'meat peripherals', as he eloquently terms human organs and limbs, a phrase he uses with an odd fluency.

"Our ability to create experiences through peoples' brains that are not mediated through their meat peripherals will actually be better than is possible," Newell says. "So you're used to experience the world through eyes, but eyes were created by this low-cost bidder who didn't care about failure rates and RMAs, and if it got broken there was no way of fixing it, effectively.

"Totally makes sense from an evolutionary perspective, but is not at all reflective of consumer preferences."

Eerie stuff.

For gaming, Newell believes you could edit visual stimuli to appear at a higher level of fidelity and immersion. As he puts it, and this is where things get doubly dread-filled:

"The real world will stop being the metric that we apply to the best possible visual fidelity, and instead it's like the real world will seem flat, colourless, blurry, compared to the experiences that you'll be able to create in peoples' brains."

To continue his trend of quoting Keanu Reeves movies, Newell goes on to explain that "it's like The Matrix said: 'Oh and we'll create something that's like reality' and I think with BCIs, fairly quickly, we'll be able to create experiences that are superior to that."

There are even quite a few similarities between what Newell says and the world within Cyberpunk 2077, yet another Reeves reference.

That's not even the weird bit, though. Things get weird when humans themselves become editable, which Newell also considers a possible use for BCI.

"Once you can start editing you start having these feedforward and feedback loops in terms of who you want to be, which is a weird thing to talk about."

Emphasis on 'weird' from Gabe there.

It seems even Newell understands this is a strange concept, but he sees that outcome as an inevitability with BCI tech, which is just in its early stages today, including within Valve's labs.

"With BCI and with what's coming in terms of neuroscience, the experiences that you'll have will be things that are curated and edited for you. Like one of the early applications I expect we'll see is improved sleep. It's like sleep will now become an app that you run."

As for today, Newell says that BCI could be used to fend off vertigo in VR headsets, and that "it's more of a certification issue" preventing that from happening. Valve is working with OpenBCI headsets to get something out that helps generate further interest from software developers in how to use BCI.

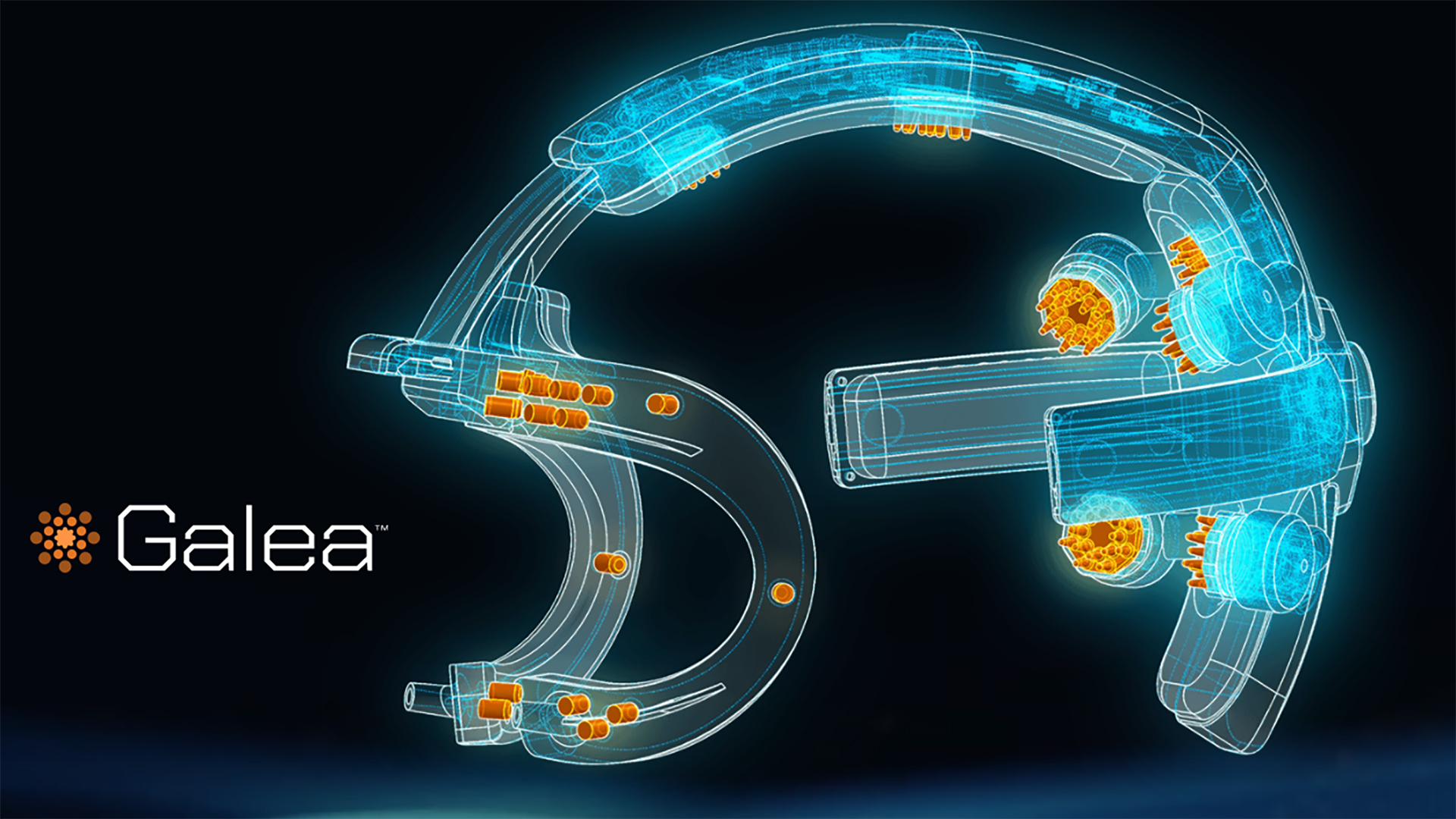

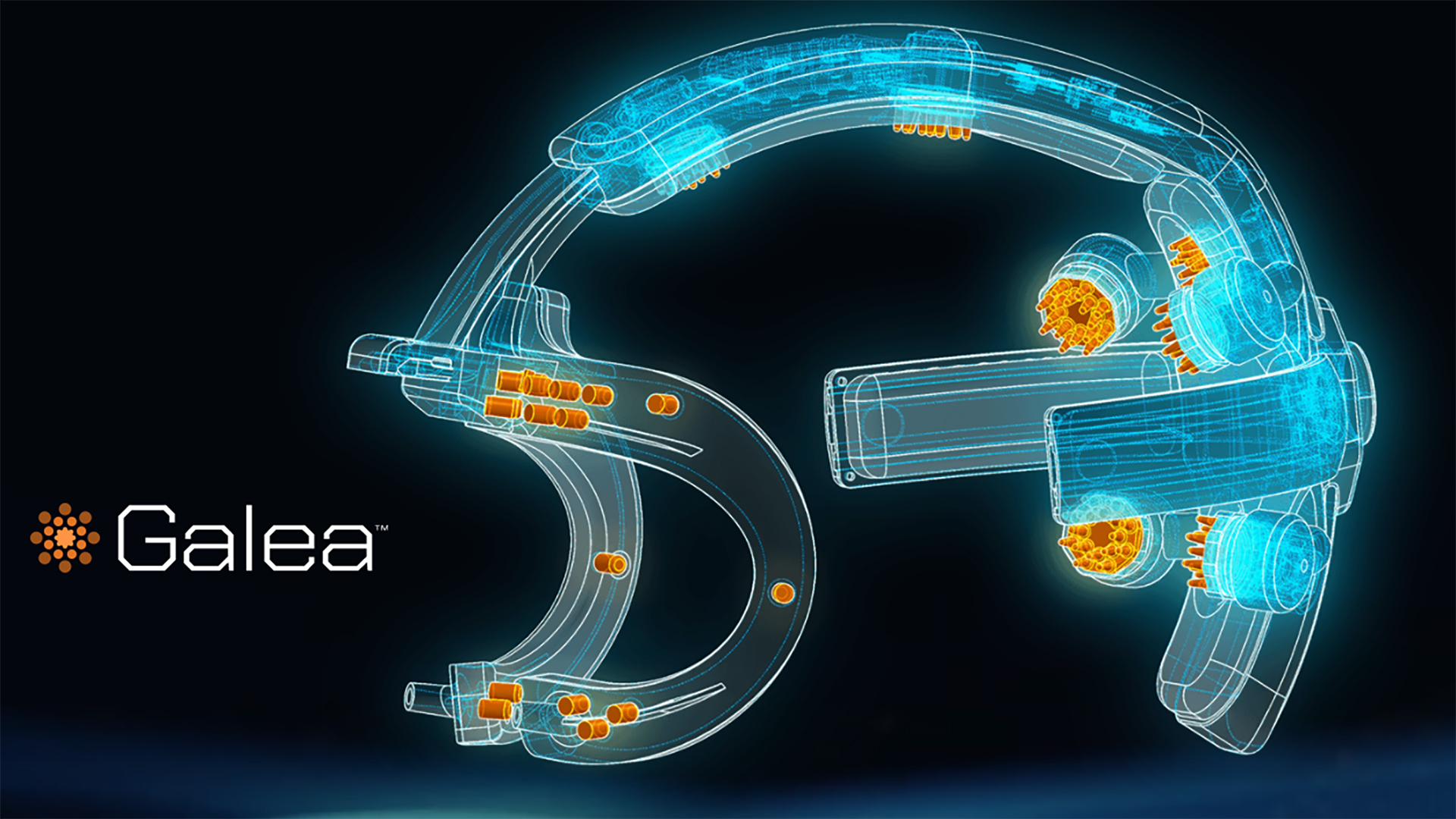

The Galea BCI headset strap is one such design. An open-source device still in beta, with very little public information available about it, it enables use of a BCI device with a range of VR headsets, including the Valve Index.

These devices are read-only: essentially data-gathering devices that allow a developer greater access to a player's mind and emotions.

That of course turns up other issues with BCI, such as possible privacy concerns. Recently Facebook has been criticised for requiring a Facebook login and connected account in order to use its Oculus VR headsets. Such a move means those who are, understandably, without a FB account now need to sign up. But with such BCI devices there may then be concerns over the type of biometric and environmental data that could not only identify a user but also offer up more information about themselves than they would understand, expect, or want the data collector to have.

A BCI device, surely, is like offering up even more of said biometric data on a silver platter.

"People are going to have to have a lot of confidence that these are secure systems that don't have long term health risks," suggests Newell.

Yet there are also wide-ranging uses for BCI technology that could genuinely do a whole lot of good. Prosthetic limbs could become an extension of someone's brain, operated much as someone would naturally operate a limb, and Newell says that game engines are particularly useful for creating simulations that help people better use that technology.

"Obviously Valve is not in the business of creating virtual prosthetics for people… but this is what we're contributing to this particular research project, and because of that we have access to leaders in the neuroscience field who teach us a lot about the neuroscience side."

A complex topic, no doubt, and for the pre-eminent videogame billionaire perhaps something of a passion project. It's certainly something I'm initially hesitant about, though, not only for the wider uses and impact but for the possibility of it all coming to pass as Gabe envisions. I'm sure many others will be, too.

Which leaves me with one question for you reading this: If you could use a BCI device today that changed your very perception, in order to play more immersive and impressive games, would you do it?

from PCGamer latest https://ift.tt/2YdaSPG

